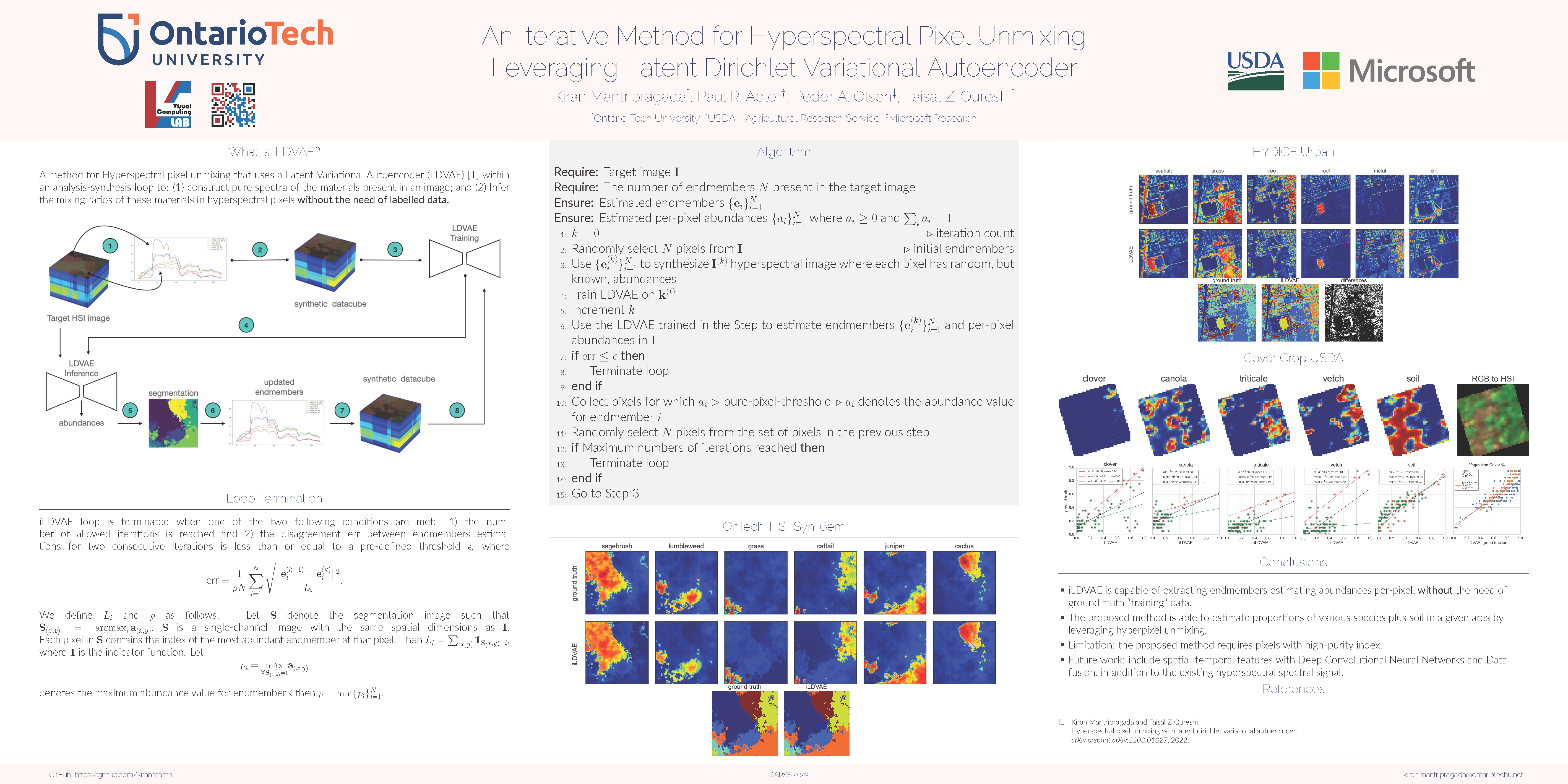

An Iterative Method for Hyperspectral Pixel Unmixing Leveraging Latent Dirichlet Variational Autoencoder

Faculty of Science

University of Ontario Institute of Technology

2000 Simcoe St. N., Oshawa ON L1G 0C5

Introduction

Hyperspectral Images (HSIs) contain far more information than a typical RGB color image: each HSI pixel records hundreds of spectral bands rather than three, capturing the integrated reflectance of the materials or objects within the instantaneous field of view subtended by that pixel. HSIs therefore present exciting opportunities for a number of downstream tasks, including material, object, and scene analysis, segmentation, and classification [1, 2]. Concomitantly, their sheer scale poses unique challenges for algorithm and system design [3].

Often, multiple materials contribute to the observed pixel intensity. In these circumstances, a pixel can be thought of as a mixture of these materials, where both the materials in question and their mixing ratios are unknown. This is especially true for low-resolution HSIs captured in remote sensing settings, where each pixel may cover a large region of space [2]. Consequently, the problem of pixel unmixing has received extensive attention in the hyperspectral research community, in terms of both methods and theory [4]. Pixel unmixing aims to identify the materials represented within a given HSI pixel and to extract their mixing ratios. It is essential for a number of downstream tasks, such as those that aim to understand the composition, heterogeneity, and proportions of various materials using hyperspectral imaging [3, 5, 6, 7].

Within this context, this paper develops the Iterative Latent Dirichlet Variational Autoencoder (iLDVAE), a novel method for hyperspectral pixel unmixing that aims to recover, from a pixel's spectral signature, the “pure” spectral signal of each material (hereafter referred to as endmembers) and the materials' mixing ratios (abundances).
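To make the unmixing problem concrete, the sketch below assumes the classical linear mixing model, in which a pixel is an abundance-weighted sum of endmember spectra and the abundances are non-negative and sum to one. This is an illustrative assumption, not the iLDVAE method itself: here the endmembers are known and the abundances are recovered by projected gradient descent onto the probability simplex, whereas iLDVAE learns both. Samples from a Dirichlet distribution lie on that same simplex, which is one natural reason to model abundances with a Dirichlet latent. All function names and numbers below are hypothetical.

```python
from typing import List

Vector = List[float]


def mix(endmembers: List[Vector], abundances: Vector) -> Vector:
    """Noise-free linear mixing model (assumed): pixel[b] = sum_k a[k] * endmembers[k][b]."""
    n_bands = len(endmembers[0])
    return [sum(a * e[b] for a, e in zip(abundances, endmembers)) for b in range(n_bands)]


def project_to_simplex(v: Vector) -> Vector:
    """Euclidean projection onto {a : a_k >= 0, sum_k a_k = 1} (standard sort-based algorithm)."""
    u = sorted(v, reverse=True)
    cumulative, theta = 0.0, 0.0
    for i, u_i in enumerate(u, start=1):
        cumulative += u_i
        t = (cumulative - 1.0) / i
        if u_i - t > 0:  # holds for a prefix of the sorted entries; keep the last one
            theta = t
    return [max(v_k - theta, 0.0) for v_k in v]


def unmix(pixel: Vector, endmembers: List[Vector], steps: int = 2000) -> Vector:
    """Estimate abundances for KNOWN endmembers by projected gradient descent on
    0.5 * ||mix(endmembers, a) - pixel||^2 with a constrained to the simplex."""
    k = len(endmembers)
    # 1 / (sum of squared entries) never exceeds 1 / (largest eigenvalue of E^T E),
    # so this step size is small enough for the iteration to converge.
    step = 1.0 / sum(x * x for e in endmembers for x in e)
    a = [1.0 / k] * k  # start from equal abundances
    for _ in range(steps):
        residual = [r - p for r, p in zip(mix(endmembers, a), pixel)]
        grad = [sum(e_b * r_b for e_b, r_b in zip(e, residual)) for e in endmembers]
        a = project_to_simplex([a_k - step * g_k for a_k, g_k in zip(a, grad)])
    return a


# Toy example: 3 hypothetical endmembers over 4 bands (made-up numbers).
endmembers = [
    [1.0, 0.0, 0.5, 0.2],
    [0.0, 1.0, 0.5, 0.8],
    [0.3, 0.3, 1.0, 0.0],
]
true_abundances = [0.6, 0.3, 0.1]
pixel = mix(endmembers, true_abundances)
estimate = unmix(pixel, endmembers)
print([round(a, 3) for a in estimate])  # approximately [0.6, 0.3, 0.1]
```

The projection step is what enforces the non-negativity and sum-to-one constraints; without it, plain least squares could return negative or unnormalized "abundances" for noisy pixels.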

Iterative LDVAE in Action

Iterative LDVAE (iLDVAE) refines its estimates of the endmembers and per-pixel abundances over successive iterations. The following videos show the quality of the resulting segmentation with respect to the ground truth; note how the segmentation improves from one iteration to the next.
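The page does not state how segmentation quality is scored in the videos. As one hedged illustration, the sketch below derives a hard segmentation by labeling each pixel with its largest-abundance endmember and then computes pixel accuracy against a ground-truth label map, tracked per iteration. It assumes the endmember indices are already matched to the ground-truth classes; the functions `segment` and `pixel_accuracy` and all abundance values are hypothetical toy inputs, not iLDVAE outputs.

```python
from typing import List, Sequence


def segment(abundance_map: List[Sequence[float]]) -> List[int]:
    """Hard segmentation (assumed): label each pixel with the index of its largest abundance."""
    return [max(range(len(a)), key=lambda k: a[k]) for a in abundance_map]


def pixel_accuracy(predicted: Sequence[int], ground_truth: Sequence[int]) -> float:
    """Fraction of pixels whose predicted label matches the ground-truth label."""
    if len(predicted) != len(ground_truth):
        raise ValueError("label maps must have the same number of pixels")
    return sum(p == g for p, g in zip(predicted, ground_truth)) / len(ground_truth)


# Hypothetical 4-pixel scene with 3 endmembers whose indices are assumed to be
# already matched to the ground-truth classes. The abundances are made-up toy
# values standing in for two successive iterations.
ground_truth = [0, 0, 1, 2]
abundances_per_iteration = [
    [[0.5, 0.3, 0.2], [0.2, 0.5, 0.3], [0.1, 0.6, 0.3], [0.4, 0.3, 0.3]],
    [[0.8, 0.1, 0.1], [0.6, 0.3, 0.1], [0.1, 0.8, 0.1], [0.2, 0.2, 0.6]],
]
accuracies = [pixel_accuracy(segment(a), ground_truth) for a in abundances_per_iteration]
print(accuracies)  # [0.5, 1.0]
```

Argmax labeling discards the mixing information, so it is only a coarse view of unmixing quality; a per-pixel abundance error against ground-truth abundances, where available, would be a finer-grained alternative.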

Poster

Datasets

Publication

For technical details, please see the following publications.